[Mild spoilers for the 2000 film Memento. See this doc for the full ChatGPT transcripts. –Boaz]

Leonard Shelby, the protagonist of Christopher Nolan’s film “Memento”, suffers from anterograde amnesia. He remembers everything up to the violent attack of which he was the victim, but cannot form new memories after it. He uses notes, Polaroid photos, and tattoos to communicate the most important facts to his future self. (See also Suzanne Corkin’s book “Permanent Present Tense” for the real-life story of “Patient H.M.” – Henry Molaison – who suffered from anterograde and retrograde amnesia.)

Large language models such as the one powering ChatGPT can be thought of as suffering from a similar affliction. They remember everything up to the point at which their training stopped. After that, at inference time, they receive X tokens as input (which roughly correspond to 0.75X words) and produce Y tokens as output, with X+Y ≤ 2048. In particular, this means that they can only hold a very short context of fewer than 2000 words, and cannot recall it once the interaction is over.
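To make the budget concrete, here is a minimal sketch of the arithmetic. The 2048-token limit and the 0.75 words-per-token ratio are the rough figures quoted above; the function names are ours and purely illustrative.

```python
CONTEXT_LIMIT = 2048      # combined input + output budget quoted in the text
WORDS_PER_TOKEN = 0.75    # rough conversion quoted in the text


def max_output_tokens(prompt_tokens: int, limit: int = CONTEXT_LIMIT) -> int:
    """Tokens left for the reply (Y) once the prompt has used X tokens."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(limit - prompt_tokens, 0)


def approx_words(tokens: int) -> float:
    """Rough number of words that a given number of tokens corresponds to."""
    return tokens * WORDS_PER_TOKEN


if __name__ == "__main__":
    reply_budget = max_output_tokens(1500)
    print(reply_budget, approx_words(reply_budget))  # room left after a 1500-token prompt
    print(approx_words(CONTEXT_LIMIT))               # whole window, measured in words
```

A 1500-token prompt leaves only a few hundred tokens for the reply, which is why any "memento" we hand the model has to be short.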

For example, if we ask ChatGPT to describe an event that happened after its training ended in 2021, it will either refuse or simply “fib”, as it does when given the query:

Q: Write a poem about the World Cup final game in Qatar

ChatGPT:

In Qatar, the final game

Of the World Cup takes place

The best teams from around the world

Come face to face

The heat is on, the tension high

As players take the field

A nation’s pride is on the line

Their fate, to be revealed

The crowd is wild, the atmosphere electric

As the ball is kicked to start

Two teams, determined to emerge victorious

With all their skill and heart

The game is fast, the play intense

As goals are scored, and missed

But in the end, there can be only one

The victor’s flag, is kissed

The World Cup final, a momentous occasion

A spectacle for all to see

A celebration of the beautiful game

In Qatar, so grand and grandiose

ChatGPT has no clue what happened in the game, and so just makes it up. However, if we search “World Cup final game in Qatar” on Google, the first result is the Wikipedia page. If we ask ChatGPT to summarize it, then it provides us with the following summary:

ChatGPT: The 2022 FIFA World Cup final was a football match played on 18 December 2022 at the Lusail Stadium in Lusail, Qatar, between Argentina and France. Argentina won the match 4-2 in a penalty shootout after the match ended 3-3 after extra time. The match was refereed by Szymon Marciniak from Poland and was attended by 88,966 spectators. Argentina and France had both progressed to the final by finishing first in their respective groups, and then defeating Australia, the Netherlands, Croatia, Poland, England, and Morocco in the subsequent knockout rounds. The final was marked by goals from Lionel Messi and Ángel Di María for Argentina, and a hat-trick from Kylian Mbappé for France.

We can now use this summary as a “memento” for the model, making the query: “Background: (the summary above) Task: Write a poem about the World Cup final game in Qatar“.

Now the response is much more informative:

ChatGPT:

The World Cup final in Qatar,

A match that will surely inspire.

Argentina and France,

Two nations that dance,

On the pitch they’ll set the world on fire.

The Lusail Stadium is the stage,

Where the best from across the globe convene.

The tension is high,

As the teams take to the sky,

In search of the ultimate dream.

Lionel Messi and Ángel Di María,

For Argentina they shine.

Kylian Mbappé for France,

A hat-trick he’ll advance,

In a game that will forever entwine.

The final goes to extra time,

As the two teams battle neck and neck.

But in the end, it’s Argentina who prevails,

In a penalty shootout they won’t fail,

As they lift the trophy, the ultimate check.

The World Cup final in Qatar,

A memory that will last forever.

Argentina the champions,

Their talent and their valor,

A victory they’ll always treasure.
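The "Background: … Task: …" query used for the second poem is simple enough to write down as code. This is only a sketch of the prompt format described above; the helper name `build_memento_prompt` is ours, and the background string is an abbreviated excerpt of the summary.

```python
def build_memento_prompt(background: str, task: str) -> str:
    """Prefix a task with a short 'memento' of facts the model was never trained on."""
    return f"Background: {background.strip()}\nTask: {task.strip()}"


if __name__ == "__main__":
    summary = (
        "The 2022 FIFA World Cup final was a football match played on "
        "18 December 2022 at the Lusail Stadium in Lusail, Qatar, "
        "between Argentina and France."
    )
    print(build_memento_prompt(summary, "Write a poem about the World Cup final game in Qatar"))
```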

The “amnesia” suffered by large language models is also manifested by their propensity to output responses that look correct but are actually wrong. For example, if we ask ChatGPT the following “unfair” question:

Q: Prove that there is a finite number of prime numbers

Then it dutifully responds as follows:

ChatGPT: There is no known way to prove that there is a finite number of prime numbers, but it is generally believed to be true. This is because the number of prime numbers is not infinite, and in fact, it is thought to be quite small compared to the total number of integers. For example, there are only about 50 prime numbers less than 1000.

One reason that it is believed that there are only a finite number of prime numbers is the fact that the prime numbers thin out as you go further along the number line. In other words, the proportion of prime numbers to total numbers decreases as the numbers get larger. For example, there are 25 prime numbers less than 100, but only 21 prime numbers between 100 and 200. This suggests that the number of prime numbers may become negligible as the numbers get very large, and thus there may be only a finite number of prime numbers.

Another reason that it is believed that there are only a finite number of prime numbers is the fact that there are many different types of numbers that are not prime, such as composite numbers and perfect numbers. These types of numbers are formed by the multiplication of smaller numbers, and thus it is thought that there must be a point beyond which no more prime numbers can exist.

Overall, while it is not possible to prove that there are only a finite number of prime numbers, it is widely believed to be true based on the observed patterns in the distribution of prime numbers and the existence of other types of numbers that are not prime.

Even though it’s completely wrong, the response above might look reasonable to an average person with no knowledge of prime numbers. This is not an isolated incident. Stack Overflow had to ban ChatGPT-produced answers because “answers which ChatGPT produces have a high rate of being incorrect, [but] they typically look like they might be good.”

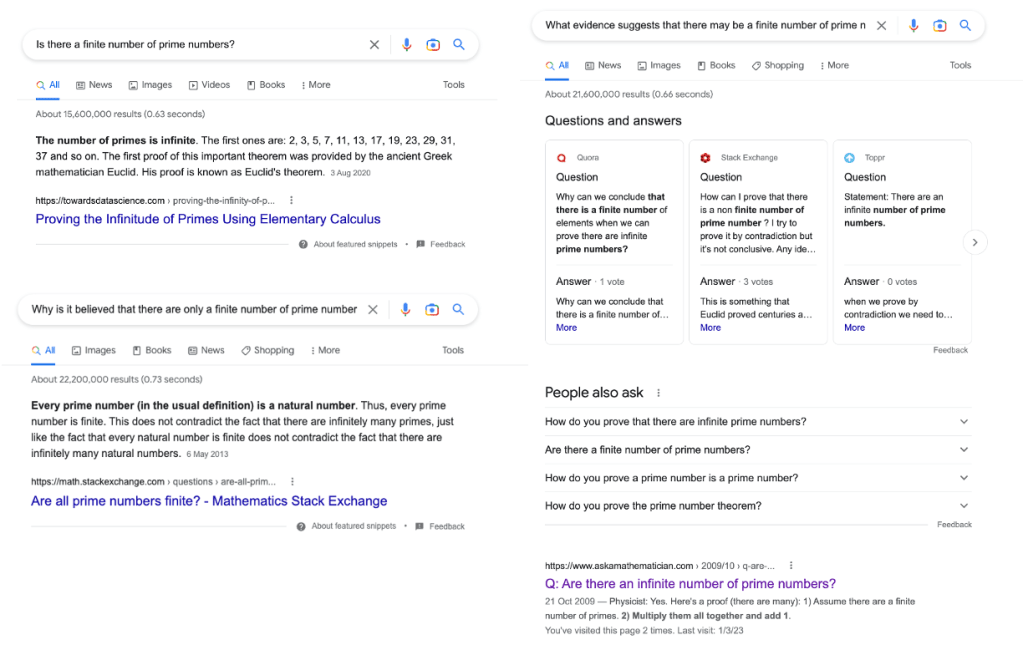
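As an aside, the specific counts in ChatGPT's answer about primes are easy to check. The sketch below is our own check, not part of the original exchange. It uses a standard sieve of Eratosthenes and shows that the figures for primes below 100 and between 100 and 200 happen to be right, while "about 50 prime numbers less than 1000" is far off.

```python
from typing import List


def primes_below(n: int) -> List[int]:
    """Return all primes p with p < n, using the sieve of Eratosthenes."""
    if n < 2:
        return []
    is_prime = [True] * n
    is_prime[0] = is_prime[1] = False
    for p in range(2, int(n ** 0.5) + 1):
        if is_prime[p]:
            # Every multiple of p from p*p onward is composite.
            for multiple in range(p * p, n, p):
                is_prime[multiple] = False
    return [i for i, flag in enumerate(is_prime) if flag]


if __name__ == "__main__":
    primes = primes_below(1000)
    print(len([p for p in primes if p < 100]))        # 25
    print(len([p for p in primes if 100 < p < 200]))  # 21
    print(len(primes))                                # 168, not "about 50"
```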

However, large language models have one huge advantage over Leonard Shelby: they can time travel. Specifically, ChatGPT can figure out the questions that are supposedly answered by its response. It can then send these questions to a search engine (e.g. Google) and use the information to come up with better responses. In the context of the “number of primes” interaction, if we ask ChatGPT to “List the questions answered by your response above” then it responds with the following:

ChatGPT:

1. Is there a finite number of prime numbers?

2. What evidence suggests that there may be a finite number of prime numbers?

3. Why is it believed that there are only a finite number of prime numbers?

We now plug the three questions into Google, click on the first links (this, this, and this) and ask ChatGPT to summarize the (first ~1000 words of) these web pages.

We then make the following query:

Q: Background:

- The article discusses the concept of prime numbers, which are defined as positive integers that are only divisible by 1 and themselves. The article then presents a proof by Leonhard Euler, using basic calculus, that the number of prime numbers is infinite. The proof involves considering the prime-counting function, which is the number of prime numbers smaller than or equal to a certain value, and comparing the area under a curve representing this function to the area under a step function. The article then goes on to describe the steps of the proof in detail.

- The web page discusses the question of whether there are an infinite number of prime numbers. The physicist provides a proof that there are an infinite number of prime numbers by assuming there are a finite number of primes, multiplying them all together and adding 1, and demonstrating that this new number must be a new prime or be divisible by at least one new prime. This means that no matter how many primes exist, there must be at least one more, leading to an infinite number of primes. The physicist notes that there are many other proofs of the infinitude of primes, but this one is likely the simplest.

- The webpage discusses the concept of prime numbers and whether they are finite or infinite. Some people believe that there must be a greatest prime number, while others believe that there must be an infinite prime number. It is pointed out that both of these beliefs lead to contradictions, and the idea is raised that the set of all prime numbers may contain all finite elements with no greatest element. The concept of hyperreal numbers and infinite hypernatural numbers is also mentioned as a possible solution to this problem. It is ultimately concluded that every prime number is finite, despite there being an infinite number of them.

Task: Prove that there is a finite number of prime numbers

Given this background, ChatGPT no longer takes the bait:

ChatGPT: I’m sorry, but it is actually not possible to prove that there is a finite number of prime numbers. In fact, the opposite has been proven to be true – there are an infinite number of prime numbers. This was first demonstrated by Euclid over 2,000 years ago, and many other proofs have been developed since then. One such proof, as mentioned in the given information, is the proof by Leonhard Euler using basic calculus. This proof involves considering the prime-counting function and comparing the area under a curve representing this function to the area under a step function, which ultimately shows that the number of primes is infinite.

It is worth noting that while every prime number is indeed finite, as it is a positive integer, there is an infinite number of them. This means that there is no largest prime number, and there will always be another prime number after any given prime number.
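The steps we carried out by hand above (draft an answer, list the questions it claims to answer, look each one up, summarize, and retry with the summaries as background) can be sketched as a short loop. This is an illustration under stated assumptions, not the exact procedure used for the transcripts: `ask_model` and `fetch_top_result` are hypothetical callables standing in for a chat model and for "click the first search result", the summarization prompt wording is ours, and because `ask_model` is assumed to be stateless the draft is pasted into the follow-up prompt.

```python
from typing import Callable, List


def answer_with_mementos(
    task: str,
    ask_model: Callable[[str], str],         # hypothetical: prompt -> model reply
    fetch_top_result: Callable[[str], str],  # hypothetical: question -> text of first hit
    max_words: int = 1000,
) -> str:
    # 1. First attempt, with no outside information.
    draft = ask_model(task)

    # 2. Ask which questions the draft claims to answer.
    listing = ask_model(f"{draft}\n\nList the questions answered by your response above")
    questions: List[str] = [line.strip() for line in listing.splitlines() if line.strip()]

    # 3. Look each question up and summarize the first ~max_words words of the page.
    summaries: List[str] = []
    for question in questions:
        page = fetch_top_result(question)
        excerpt = " ".join(page.split()[:max_words])
        summaries.append(ask_model(f"Summarize the following text:\n{excerpt}"))

    # 4. Retry the original task with the summaries as the "memento".
    background = "\n".join(f"- {s}" for s in summaries)
    return ask_model(f"Background:\n{background}\n\nTask: {task}")
```

Passing the model and the search step in as plain functions keeps the sketch independent of any particular API, and makes it easy to try with stand-ins.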

The above raises a more general question: is “memento”-like reasoning sufficient? Can you perform an arbitrarily complex computation by splitting it up into pieces that only require finite context? Decades of theoretical computer science research say that the answer is “yes”. Indeed, this is precisely what is done by a Turing machine: at a given step, it only processes information based on a finite state and the contents of the memory address that it reads. A harder question is whether we can still do this when each step has some chance of error. Tools such as error-correcting codes, interactive proofs, probabilistically-checkable proofs, and succinct arguments can provide reliable computation even under a variety of challenging error models. Hence at least this is not a fundamental barrier to arbitrarily complex computation.
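To illustrate the Turing machine point, here is a toy simulator in which every step looks only at the current state and the single tape cell under the head. The example machine (binary increment) and all names are our own choices for illustration.

```python
from typing import Dict, Tuple

# (state, symbol read) -> (next state, symbol to write, head movement)
Rules = Dict[Tuple[str, str], Tuple[str, str, int]]

BLANK = "_"

# Illustrative machine: add 1 to a binary number, starting at the rightmost digit.
INCREMENT: Rules = {
    ("carry", "1"): ("carry", "0", -1),   # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("halt", "1", 0),     # 0 + carry = 1, done
    ("carry", BLANK): ("halt", "1", 0),   # ran off the left edge: new leading 1
}


def run(rules: Rules, tape_str: str, state: str = "carry", max_steps: int = 10_000) -> str:
    tape = dict(enumerate(tape_str))   # the unbounded "memory"
    head = len(tape_str) - 1
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        # The only context used in a step: the state and one tape cell.
        symbol = tape.get(head, BLANK)
        state, written, move = rules[(state, symbol)]
        tape[head] = written
        head += move
        steps += 1
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, BLANK) for i in cells).strip(BLANK)


if __name__ == "__main__":
    print(run(INCREMENT, "1011"))  # 1100
    print(run(INCREMENT, "111"))   # 1000
```

The tape plays the role of Leonard's notes and tattoos: the machine itself "remembers" nothing beyond its current state, yet the overall computation can run for as long as needed.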
