Mass surveillance, red lines, and a crazy weekend

[These are my own opinions and do not represent OpenAI. Crossposted on LessWrong] AI has so many applications, and AI companies have limited resources and attention spans. Hence, if it were up to me, I’d prefer we focus on applications that are purely beneficial (science, healthcare, education), or even commercial, before working on anything related …

Trevisan Award for Expository Work

[Guest post by Salil Vadhan; Boaz's note: I am thrilled to see this award. Luca's expository writing on his blog, surveys, and lecture notes was and is an amazing resource. Luca had a knack for explaining some of the most difficult topics in intuitive ways that cut to their heart. I hope it will inspire …

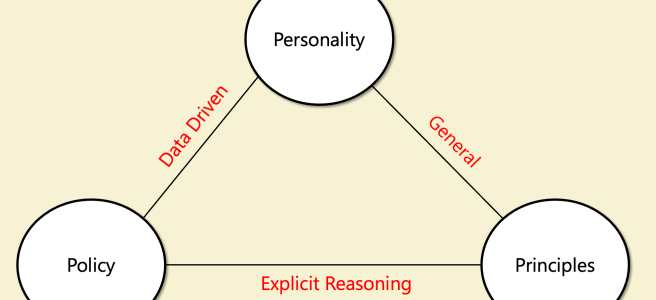

Thoughts on Claude’s Constitution

[I work on the alignment team at OpenAI. However, these are my personal thoughts and do not reflect those of OpenAI. Crossposted on LessWrong] I have read Claude’s new constitution with great interest. It is a remarkable document, and I recommend reading it. It seems natural to compare this constitution to OpenAI’s Model Spec, but while the documents …

TheoryFest 2026 Call for Workshops (guest post by Mary Wooters)

TheoryFest 2026 will hold workshops during the STOC 2026 conference week, June 22–26, 2026, in Salt Lake City, Utah, USA. We invite groups of interested researchers to submit workshop proposals! For more details, see:
https://acm-stoc.org/stoc2026/callforworkshops.html

Submission deadline: March 6, 2026

Thoughts by a non-economist on AI and economics

[Crossposted on LessWrong] Modern humans first emerged about 100,000 years ago. For the next 99,800 years or so, nothing happened. Well, not quite nothing. There were wars, political intrigue, the invention of agriculture, but none of that stuff had much effect on the quality of people's lives. Almost everyone lived on the modern equivalent …

CS 2881: AI Safety

The webpage for my AI safety course is at https://boazbk.github.io/mltheoryseminar/ and includes homework zero, a video of the first lecture, and the slides. Future blog posts related to this course will be posted on LessWrong, since many people interested in AI safety visit that site.

Video of the first lecture: https://youtu.be/-NCiWaRS6So

Some snapshots from the slides:

AI Safety Course Intro Blog

I am teaching CS 2881: AI Safety this fall at Harvard. This post is primarily aimed at students at Harvard or MIT (where we have a cross-registration agreement) who are considering taking the course. However, it may be of interest to others as well. For more of my thoughts on AI safety, see the blogs …

Machines of Faithful Obedience

[Crossposted on LessWrong] Throughout history, technological and scientific advances have had both good and ill effects, but their overall impact has been overwhelmingly positive. Thanks to scientific progress, most people on Earth live longer, healthier, and better lives than they did centuries or even decades ago. I believe that AI (including AGI and ASI) can do …

Trevisan prize (guest post by Alon Rosen)

The Trevisan Prize for outstanding work in the Theory of Computing is sponsored by the Department of Computing Sciences at Bocconi University and the Italian Academy of Sciences. The prize is named in honor of Luca Trevisan in recognition of his major contributions to the Theory of Computing. It aims to recognize outstanding work in the field, and to broaden the reach …

Call for Papers: Information-Theoretic Cryptography

The sixth Information-Theoretic Cryptography (ITC) conference will be held at UC Santa Barbara, California, on August 16–17, 2025. The conference is affiliated with CRYPTO 2025 and will take place at the same location just before CRYPTO. Information-theoretic cryptography deals with the design and implementation of cryptographic protocols and primitives with unconditional security guarantees and the usage …